Pre-Crime Facial Recognition System Developed

By PNW Staff June 14, 2016

One day next year, you try to board a plane but are stopped by security. The automated facial recognition system has informed the officials that you look like a terrorist and might pose a security risk.

A few days later, you walk into a bank to apply for a mortgage, but are turned away before you can explain because once again the automated facial analysis software the bank uses has determined you have features associated with gamblers.

You have no criminal record and your credit rating is fine, but that doesn't matter to the software behind the computer system known as Faception.

Working from an image, it matches facial features against its database to predict behavior before it occurs, labeling you with tags such as pedophile, terrorist, gambler or thief based solely on the geometry of your face.

The Israeli startup Faception claims to already be in talks with the Department of Homeland Security in the United States about employing its system, for which it has, according to marketing material, "built 15 different classifiers, including extrovert, genius, academic researcher, professional poker players, bingo player, brand promoter, white collar offender, pedophile and terrorist."

Faception claims to have identified 9 of the 11 terrorists involved in the Paris attacks when its criminal detection algorithms were applied to photos after the attacks, and the company estimates its overall accuracy at 80%.

The system, it must be remembered, does not perform facial recognition in the traditional sense: it does not bother to attach a name to a face.

Using facial features to predict traits, it instead lies much closer to 19th-century theories of phrenology, a now-debunked pseudoscience that used the shape of the skull to predict a host of factors about a person's mind and behavior.

While few would dispute the power of body language and facial expression to indicate our mood and attention, alerting a security guard to a nervous commuter for example, the notion that inherent criminality can be found in the shape of one's eyes or the position of a nose is both deeply troubling and doubted by most experts.

But as with no-fly lists that keep toddlers who share names with terrorists from boarding, let's not assume that concerns about truth, racism or effectiveness will be an impediment to implementing Faception.

Yet true facial recognition carries its own dangers as well. In the past century, the average person enjoyed a measure of privacy through obscurity. Walk down the street of a major city and there was little chance of being recognized among thousands of anonymous faces.

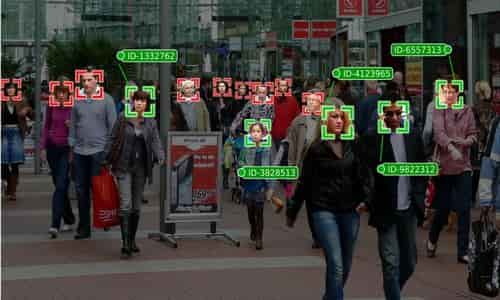

That is all changing now. As facial recognition systems continue to be refined and produced for smartphones, instant recognition of any face is in the hands of the public at large, not to mention tens of thousands of CCTV cameras.

One such application is called FindFace, an app developed by two Russian programmers that allows anyone to use facial recognition software and their smartphone camera to identify faces on the street and link each to a real name with 70% accuracy.

The system cross-references facial geometry with photos found on social networks to produce name matches for anyone passing on the street, sitting on a bus or entering a store.

It is not hard to imagine how such a system could serve those who would like to identify protestors, find the name of their next stalking victim or perhaps ID a witness or an adult film actress.

If the potential for abuse with FindFace and its link to social networks is troubling, then consider the power of the UK's Facewatch, an online site that acts as a public watch list for known face profiles and which has now been integrated with facial recognition software, NEC's NeoFace, and private surveillance cameras.

As first reported by Ars Technica in 2015, the intention is for shop owners to place a thief's or unruly customer's face on the public watch list, which will allow every security camera across the world to flag that individual and deny him entry into other restaurants and stores.

What could possibly go wrong with a crowdsourced blacklist for automated social exclusion? Certainly a waiter who gets stiffed on a tip wouldn't add your face to the list.

With any of the proposed systems, there exists the danger not only of tearing away the last layers of public privacy that remain but also of placing an inordinate level of trust in the accuracy of those systems through a phenomenon known as automation bias, by which we tend to trust automated systems more than humans.

Aside from the dubious claims of predicting behavior based simply on one's facial features, a premise that appears on the surface to be little more than automating a centuries-old process of racial profiling, the rapid advances of facial recognition could spell an end to any semblance of privacy in public.

Soon, these systems promise, our faces will be automatically entered into government, commercial and crowdsourced databases open to advanced recognition algorithms.
